By Fernando Marmolejo-Ramos, Tim Simon and Rhoda Abadia; University of South Australia

In early 2023, OpenAI’s ChatGPT became the buzzword in the Artificial Intelligence (AI) world. It is a cutting-edge large language model (LLM) and part of the generative AI movement. Google’s Bard and Anthropic’s Claude are other notable LLMs in this league, transforming the way we interact with AI applications. LLMs can be thought of as super-sized dynamic libraries that can respond to queries, summarise text, and even tackle complex mathematical problems. Since ChatGPT’s debut, there has been a surge of academic papers and grey literature (including blogs and pre-prints) both praising and critiquing the impact of LLMs. In this discussion, we emphasise the importance of recognising LLMs as technologies that can augment human learning. Through examples, we illustrate how interacting with LLMs can foster AI literacy and augment learning, ultimately boosting innovation and creativity in problem-solving scenarios.

In the field of education, LLMs have emerged as tools with the potential to enhance the learning experience for both students and teachers. They can supplement reading, research, and personalised tutoring.

For students, LLMs offer the convenience of summarising lengthy textbook chapters and locating relevant literature with tools like ChatPDF, ChatDOC, Perplexity, or Consensus. We believe that these tools not only accelerate students’ understanding of the material but also enable a deeper grasp of the subject matter. LLMs can also act as personalised tutors that are readily available to answer students’ queries and provide guided explanations.

For teachers, LLMs may help reduce repetitive tasks such as grading assignments. By analysing students’ essays and short answers, they can help assess coherence and reasoning and flag possible plagiarism, thereby freeing time for meaningful teaching. Additionally, LLMs have the potential to suggest personalised feedback and improvements for individual students, enhancing the overall learning experience. The caveat, though, is that human judgement must remain ‘in the loop’, as LLMs have limited understanding of teaching methodologies, curriculum, and student needs. UNESCO has recognised this need and produced a short guide on the use of LLMs in higher education, providing valuable insights for educators (see table on page 10).

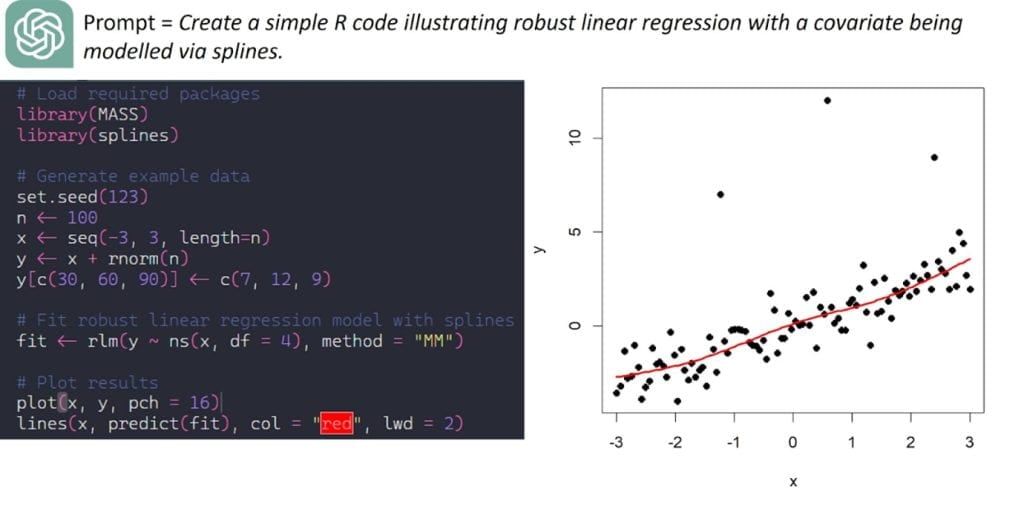

Achieving good results with LLMs depends on “prompt engineering” (PE), a term referring to the art of crafting effective prompts that guide these language models towards informed responses. For instance, a prompt could be as straightforward as “rewrite the following = X,” where X represents the text to be rephrased. Alternatively, a prompt like “explain what Z is in layman’s terms” can help clarify intricate concepts. In Figure 1, we present an example demonstrating how students can use specific prompts to learn statistical concepts while simultaneously gaining familiarity with R coding.

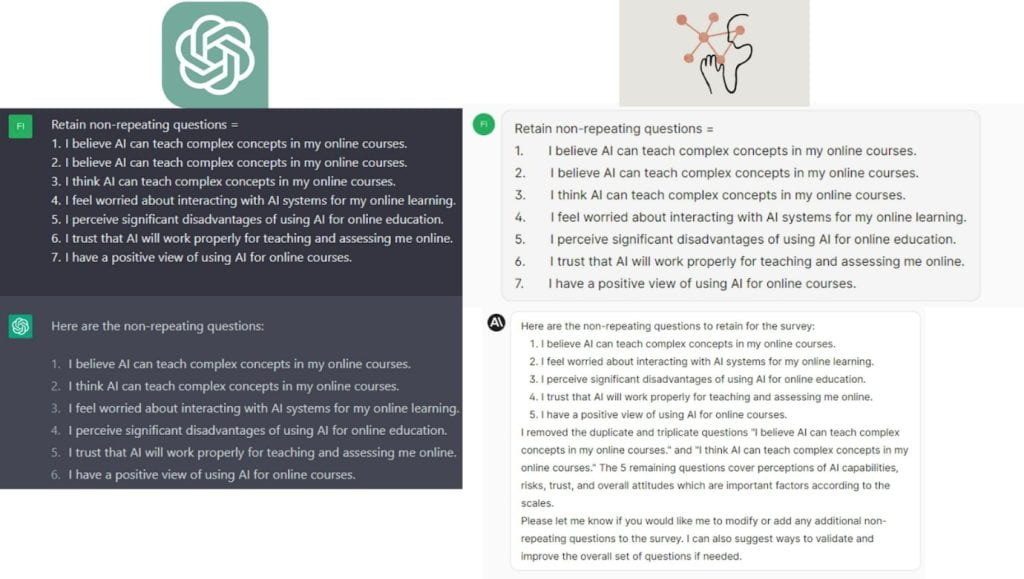

Additionally, Figure 2 reveals that not all LLMs offer identical responses to the same prompts, highlighting the uniqueness of each model’s output.

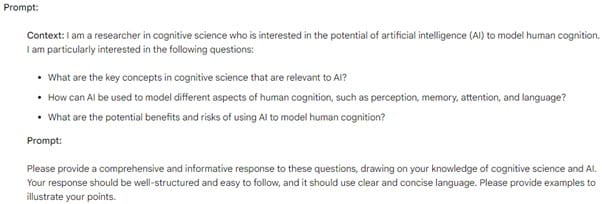

However, the most interesting aspect of PE lies in formulating appropriate questions for the LLMs, which makes it a matter of problem formulation. We believe this crucial element is at the core of effective prompting in educational contexts. Seen this way, good prompts should supply context for the question being asked, as context provides reference points for the intended meaning. For example, a teacher or student could design a prompt like: “Given the information in texts A and B, produce a text that discusses concepts a1 and a2 in text A in terms of concepts b1 and b2 in text B”, where A and B are paragraphs or texts given along with the prompt and a1, a2, b1 and b2 are specific aspects of texts A and B. Admittedly, that generic prompt carries little context of its own; context-rich prompts can nonetheless be constructed (see Figure 3). These examples also hint at the idea that prompts work in a “rubbish prompts in; rubbish responses out” fashion; i.e. the quality of the response depends heavily on the quality of the prompt.

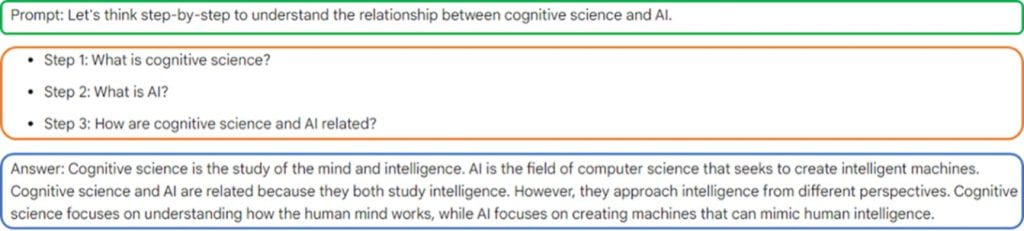
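The “texts A and B” prompt described above can be assembled programmatically, which makes it easier to reuse across lessons. The following is a minimal Python sketch, not a prescribed method: the function name `build_comparison_prompt` and its parameters are hypothetical, and the optional `context` argument is an assumption about one way to make the prompt context-rich.

```python
from typing import Sequence


def build_comparison_prompt(
    text_a: str,
    text_b: str,
    concepts_a: Sequence[str],
    concepts_b: Sequence[str],
    context: str = "",
) -> str:
    """Assemble the 'discuss A's concepts in terms of B's concepts' prompt.

    All names here are illustrative; the wording of the final instruction
    follows the example prompt given in the article.
    """
    if not concepts_a or not concepts_b:
        raise ValueError("Each text needs at least one concept to discuss.")

    parts = []
    # Optional background (audience, course level, purpose) goes first,
    # so the model reads it before the task itself.
    if context.strip():
        parts.append(f"Context: {context.strip()}")
    parts.append(f"Text A:\n{text_a.strip()}")
    parts.append(f"Text B:\n{text_b.strip()}")
    parts.append(
        "Given the information in texts A and B, produce a text that discusses "
        f"concepts {' and '.join(concepts_a)} in text A in terms of "
        f"concepts {' and '.join(concepts_b)} in text B."
    )
    return "\n\n".join(parts)


if __name__ == "__main__":
    prompt = build_comparison_prompt(
        text_a="(paragraph A goes here)",
        text_b="(paragraph B goes here)",
        concepts_a=["a1", "a2"],
        concepts_b=["b1", "b2"],
        context="The reader is a first-year undergraduate student.",
    )
    print(prompt)
```

The resulting string can be pasted into any chat-based LLM. Keeping the context as a separate argument makes it easy to compare responses with and without it.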

PE is thus a process that involves engaging in a dialogue with the LLM to discover creative and innovative solutions to problems. One effective approach is “chain-of-thought” (CoT) prompting, which entails prompting the LLM to provide more in-depth responses by following up on previously introduced ideas. The example shown in Figure 4 was output by Bard after the prompt “provide an example of a chain of thought prompting to be submitted to a large language model”. The green box contains the initial prompt, the orange box represents three subsequent questions, and the blue box represents a potential answer given by the LLM. Another way of CoT prompting is to start by setting a topic (e.g. “The Role of Artificial Intelligence (AI) in Education”) and then ask questions such as “start by defining Artificial Intelligence (AI) and its relevance in the context of education, including its potential applications in learning, teaching, and educational administration.”, “explore how AI can personalise the learning experience for students, catering to individual needs, learning styles, and pace of progress.”, “discuss the benefits of AI-powered adaptive learning systems in identifying students’ strengths and weaknesses, providing targeted interventions, and improving overall academic performance.”, “examine the role of AI in automating administrative tasks, such as grading, scheduling, and resource management, to enhance efficiency and reduce the burden on educators.”, and so on.
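The topic-then-follow-up pattern described above can be sketched as a small loop that keeps the whole conversation history and sends it along with each new question. This is only an illustration under stated assumptions: `run_prompt_chain` and `placeholder_model` are hypothetical names, and `placeholder_model` is a stand-in that returns canned text rather than calling a real LLM. In practice it would be replaced by a call to whichever chat service is being used.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]  # {"role": "user" | "assistant", "content": "..."}


def run_prompt_chain(
    topic: str,
    follow_ups: List[str],
    ask: Callable[[List[Message]], str],
) -> List[Message]:
    """Set a topic, then ask each follow-up with the full history attached."""
    history: List[Message] = [{"role": "user", "content": f"Topic: {topic}"}]
    history.append({"role": "assistant", "content": ask(history)})
    for question in follow_ups:
        # Each follow-up builds on everything said so far.
        history.append({"role": "user", "content": question})
        history.append({"role": "assistant", "content": ask(history)})
    return history


def placeholder_model(history: List[Message]) -> str:
    """Hypothetical stand-in for an LLM: reports which user turn it answers."""
    user_turns = sum(1 for message in history if message["role"] == "user")
    return f"[reply to user turn {user_turns}]"


if __name__ == "__main__":
    conversation = run_prompt_chain(
        topic="The Role of Artificial Intelligence (AI) in Education",
        follow_ups=[
            "Start by defining AI and its relevance in the context of education.",
            "Explore how AI can personalise the learning experience for students.",
        ],
        ask=placeholder_model,
    )
    for message in conversation:
        print(f"{message['role']}: {message['content']}")
```

Passing the accumulated history on every turn is what lets later questions build on earlier answers, which is the point of this style of prompting.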

Variants of CoT prompting generate several CoT reasoning paths rather than a single one (see the articles “Tree of Thoughts: Deliberate Problem Solving with Large Language Models” and “Large Language Models Tree-of-Thoughts”). Regardless of the CoT prompting used, the ultimate goal is to solve a problem in an original and informative way.

It’s crucial not to overlook AI technologies but rather to embrace them, finding the right balance between tasks delegated to AI and those best suited to human involvement. Fine-tuning the interactions between humans and AI is key when exchanging information, ensuring seamless and effective collaboration between the two.
