Krista Yuen, The University of Waikato

Danielle Degiorgio, Edith Cowan University

Warning – ChatGPT and DALL-E were used in the making of this post.

Experienced AI users have been experimenting with the art of prompt engineering to ensure they are getting the most useful and accurate responses from generative AI systems. As a result, they have created and synthesised techniques to ensure that they are getting the best output from these systems. Crafting an effective prompt, also known as prompt engineering, is arguably a skill that may be needed in a world of information seeking, as the trend of AI continues to grow.

Whilst AI continues to improve, and many systems now encourage more precise prompting from their users, AI is still only as good as the prompts they are given. Essentially, if you want quality content, you must use quality prompts. The structure of a solid prompt requires critical thinking and reflection in the design of your prompt, as well as how you interact with the output. While there are many ways to structure a prompt, these are the three more important things to remember when constructing your prompt:

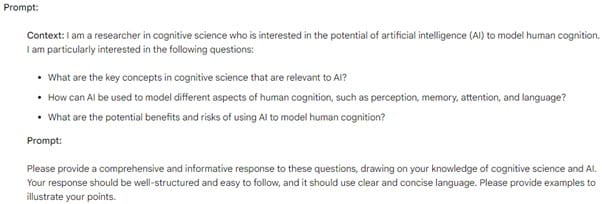

Context

- Provide background information

- Set the scene

- Use exact keywords

- Specify audience

- You could also give the AI tool a role to play, e.g. “Act as an expert community organiser!”

Task

- Clearly define tasks

- Be as specific as possible about exactly what you want the AI tool to do

- Break down the steps involved if needed

- Put in any extra detail, information or text that the AI tool needs

Output

- Specify desired format, style, and tone

- Specify inclusions and exclusions

- Tell it how you would like the results formatted, e.g. a table, bullet point list or even in HTML or CSS.

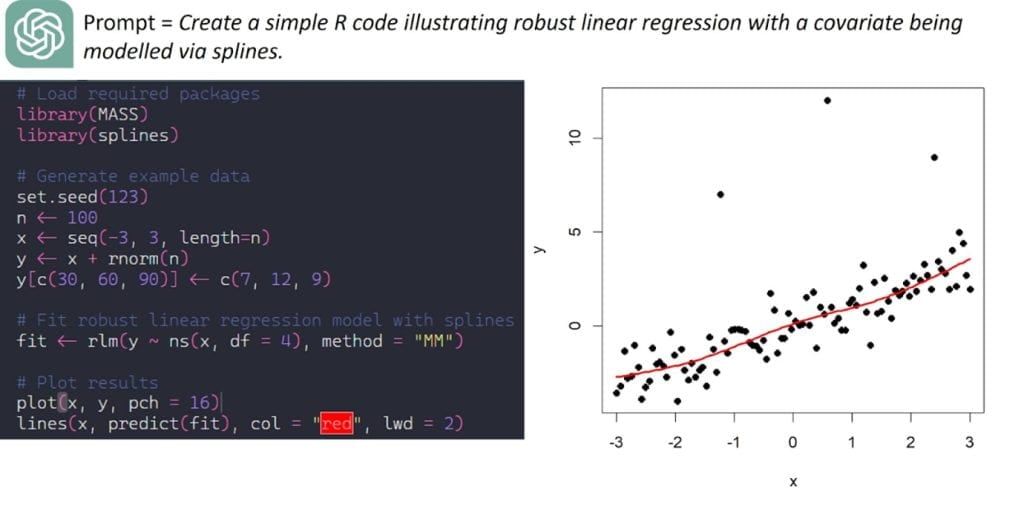

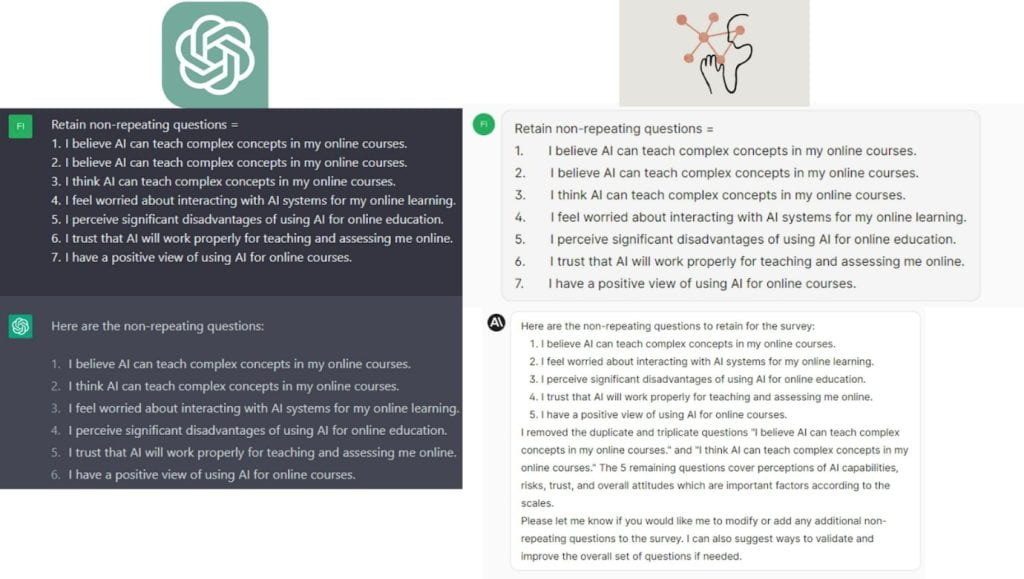

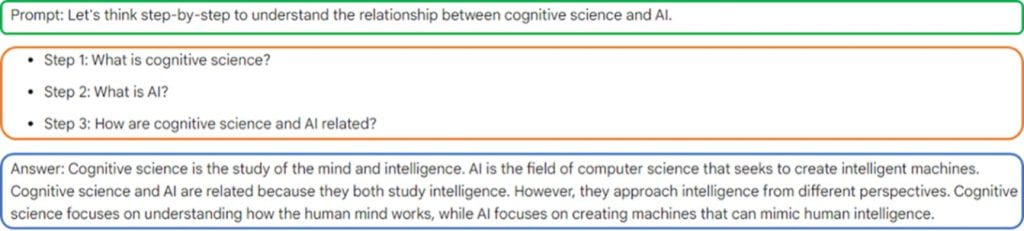

Example prompt for text generation e.g., ChatGPT

You are an expert marketing and communications advisor working on a project for dolphin conservation and need to create a comprehensive marketing proposal. The goal is to raise awareness and promote actions that contribute to the protection of dolphins and their habitats. The target audience includes environmental activists and the general public who might be interested in marine conservation.

The proposal should highlight the current challenges faced by dolphins, including threats like pollution, overfishing, and habitat destruction. It should emphasise the importance of dolphins to marine ecosystems and their appeal to people due to their intelligence and playful nature. It should include five bullet points for each area: campaign objectives, target audience, key messages, marketing channels, content ideas, partnerships, budget estimation, timeline, and evaluation metrics.

Please structure it in a format that is easy to present to stakeholders, such as a PowerPoint presentation or a detailed report. It should be professionally written, persuasive, and visually appealing with suggestions for imagery and design elements that align with the theme of dolphin conservation.

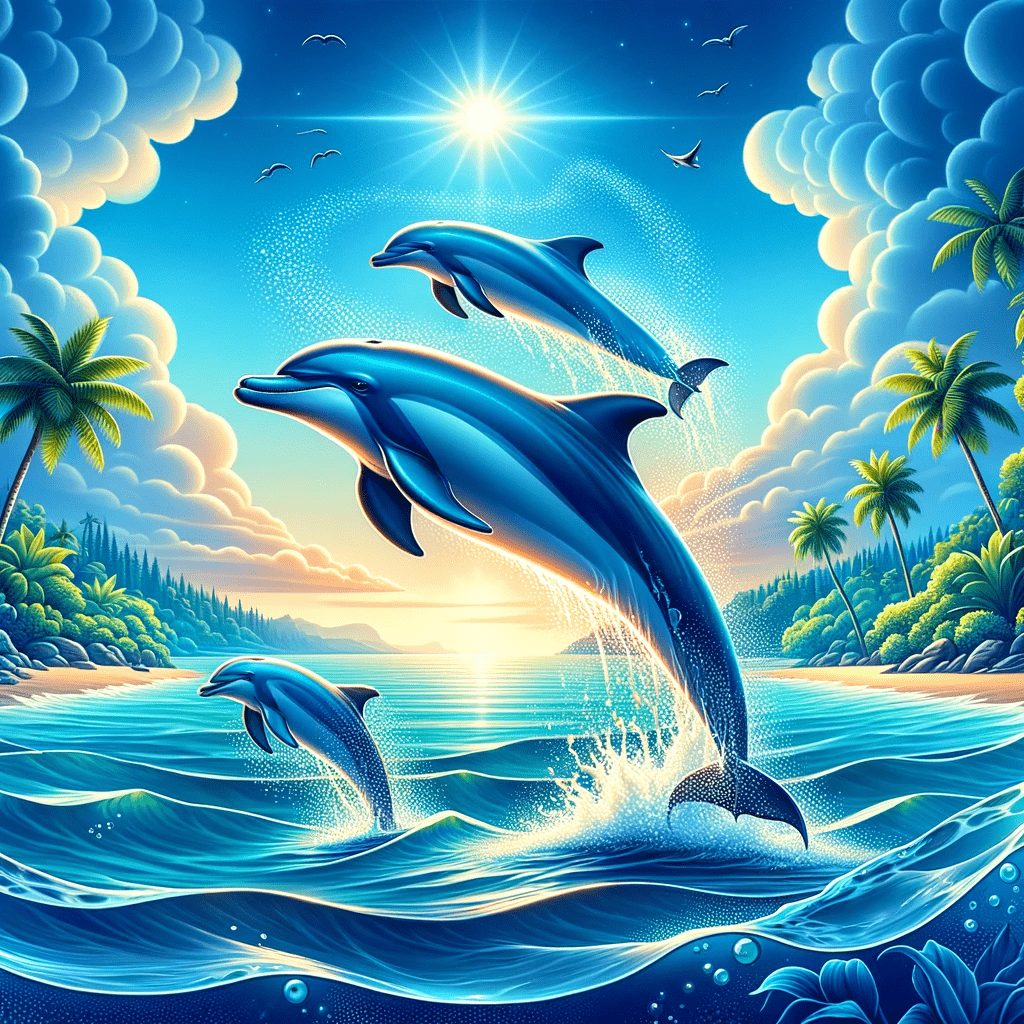

Example prompt for image generation e.g., DALL∙E

Create a captivating and colourful image for a marketing campaign focused on dolphin conservation. The setting is a serene, crystal-clear ocean under a bright blue sky with soft, fluffy clouds. In the foreground, a group of three playful dolphins is leaping gracefully out of the water. These dolphins should appear joyful and full of life, symbolising the beauty and intelligence of marine life.

The central dolphin, a majestic bottlenose, is at the peak of its jump, with water droplets sparkling around it like diamonds under the sunlight. On the left, a smaller, younger dolphin, mirrors its movement, adding a sense of playfulness and family. To the right, another dolphin is partially submerged, preparing to leap. In the background, a distant, unspoiled coastline with lush greenery and a few palm trees provides a natural, pristine environment. This idyllic scene should evoke a sense of peace and the importance of preserving such beautiful natural habitats.

Not getting the results you want?

If your first response has not given you exactly what you need, remember you can try and try again! You may need to add more guidelines to your prompt:

- Try adding more words or ideas that might be needed. What kind of instructions might make your prompt obtain more?

- Provide some more context, like “I’m not an expert and I need this explained to me in simpler terms.”

- Do you need more detailed information that will make your response more relevant and useful?

Want to learn more?

There are a few places you can go to learn more about developing good prompts for your generative AI tool: