Craig Wattam, Rachael Richardson-Bullock

Te Mātāpuna Library & Learning Services, Auckland University of Technology

“The university is at the stage of reviewing its rules for misconduct because they really don’t apply as much anymore.”

– Tom, Student Advocate, on the Noisy Librarian Podcast

Cheating in the tertiary education sector is not new. Generative AI technologies, while presenting enormous opportunity, are the latest threat to academic integrity. AI tools like ChatGPT blur the lines between human-generated and machine-generated content. They present a raft of issues, including ambiguous standards for legitimate and illegitimate use, variations in acceptance and usage across discipline contexts, and little or inadequate evidence of their use. A nuanced response is required.

Fostering academic integrity through AI literacy

Academic integrity research consistently argues that a systematic, multi-stakeholder, networked approach is the best way to foster a culture of academic integrity (Kenny & Eaton, 2022). Fortunately, this is also the way to foster ethical, critically reflective, and skilful use of AI tools: in other words, a culture of AI literacy. Ironically, to support integrity, we must shift our attention away from merely preventing cheating and towards ensuring that students learn how to use these tools responsibly. That way, our focus stays on learning and on helping students develop the skills necessary to navigate the digital age ethically and effectively.

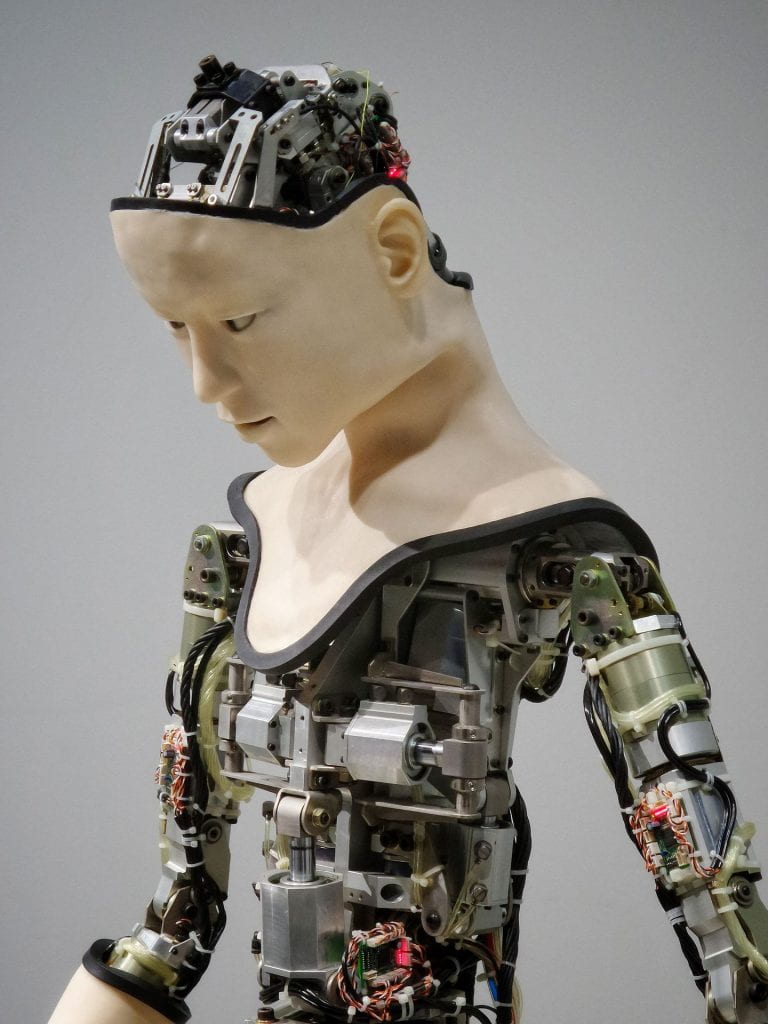

Hybrid future

So, the challenge of AI is both an opportunity and an imperative. As we humans continue to interact with technology in high-complexity systems, the way we approach academic work will continue to develop. Rather than backing away or banning AI technologies from the classroom altogether, forging a hybrid future, where AI tools play a role in setting students up for success, will benefit both staff and students.

Information and academic literacy practitioners, and other educators, will need to be dexterous enough to respond to the eclipsing, revision, and constant evolution of some of our most ingrained concepts, such as authorship, originality, plagiarism, and acknowledgement.

What do students say?

This was the topic of discussion in a recent episode of the Noisy Librarian Podcast. Featured guests were an academic and a student – a library Learning Advisor and a Student Advocate. The guests delved into the complexities of academic integrity in today’s digital landscape. Importantly, their discussion underscored the need for organisations to understand and hear from students about how AI is impacting them, how they are using it, and what they might be concerned about. Incorporating the student voice and understanding student perspectives is crucial for developing guidelines and support services that are truly effective and relevant.

Forget supervillains!

Both podcast guests emphasised that few cases of student misconduct involve serial offenders or supervillains who have made a career out of gaming the system. Misconduct is more often related to a lack of knowledge or skill than to an intention to cheat. Meanwhile, universities face the challenge of adapting their misconduct rules and providing clear guidelines on the acceptable use of AI tools.

Listen to the Noisy Librarian Podcast episode “Is ChatGPT cheating? The complexities of AI use in tertiary education”

Podbean

Or find us on Google Podcasts, Apple Podcasts or iHeartRadio

Reference:

Kenny, N., & Eaton, S. E. (2022). Academic Integrity Through a SoTL Lens and 4M Framework: An Institutional Self-Study. In Academic Integrity in Canada (pp. 573–592). Springer, Cham. https://doi.org/10.1007/978-3-030-83255-1_30